A paradigm shift in virtualisation – Containers

12 billion Container image pulls (DockerCon 2017) and counting – it is no wonder Containerisation and virtualisation are hot topics in the industry today. There has been a large-scale adoption of Containers by large businesses across many verticals and governments as evidenced by 390,000% growth in Docker Hub image pulls in just three years. Datadog’s user analysis suggests that an average company quintuples its Docker usage within 9 months.

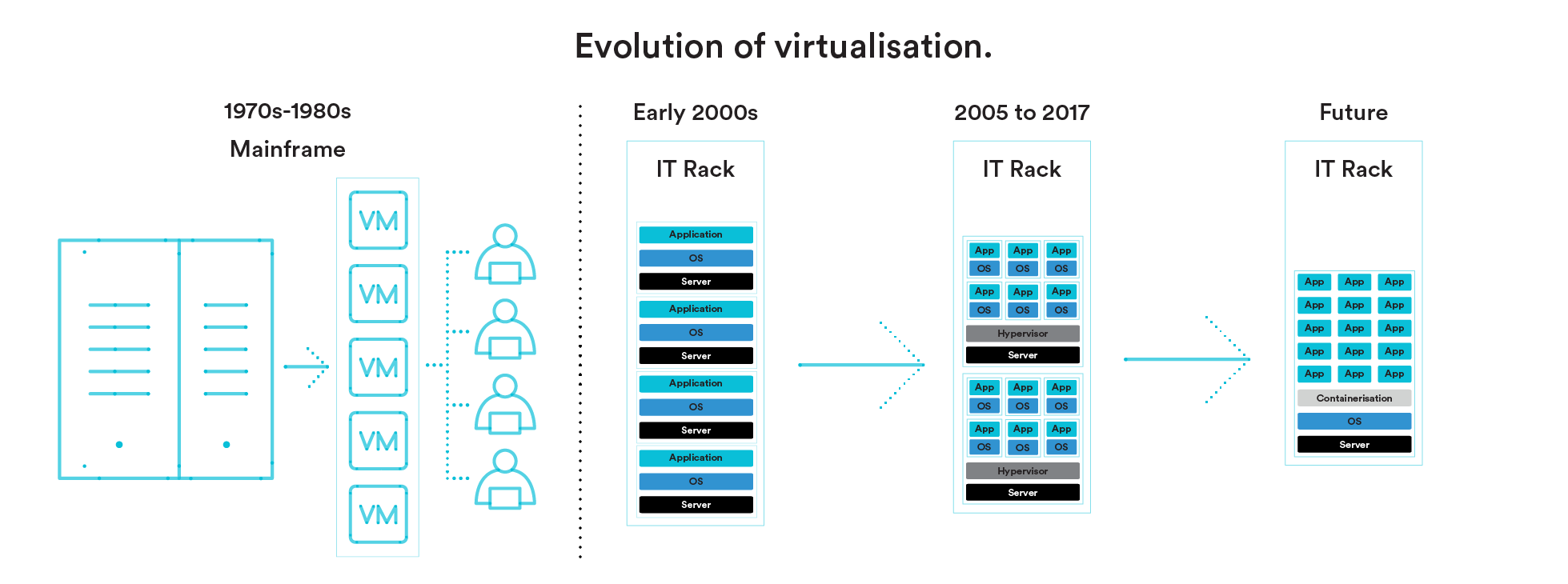

Before we discuss why Containerisation has garnered so much hype, what Containers are and the benefits they bring, it is appropriate to look at how Containerisation came about. To do that I want to take you back to where it all started.

History of virtualisation.

Let us rewind to the 1970s and 1980s, before microcomputers or Personal Computers (PCs) were the norm. When someone mentioned the word "computer", they usually meant a minicomputer or a mainframe. Those computers were very expensive machines located in sterile facilities, and only a few businesses and well-funded universities could afford them. As these expensive machines were not utilised to their full capacity, researchers started creating models to improve system utilisation and therefore deliver better ROI. “Batch processing” was soon employed as a way of sequentially queueing and feeding application code into the computers – a conveyor belt for code execution that put unused compute cycles to work. While this dramatically improved resource utilisation, it did not cater for concurrent, multi-tenant use of the machine.

Rigorous pursuit of a concurrent user utilisation model led to the development of “Time Sharing”, which enabled multiple users to work on the same system simultaneously and independently of each other, thus improving system utilisation. Time Sharing was essentially the beginning of modern-day virtualisation. In 1972, IBM introduced its first Virtual Machine Operating System for its mainframes, allowing them to fully virtualise all I/O and other operations. Each user had their own environment, address space and virtual devices, and could run their own application. The idea behind this was pretty much the same as today – to save compute costs for the end user by utilising the unused resources of an expensive and underused compute platform.

IBM’s Virtual Machines (VMs) allowed its mainframes to be more efficient and better managed, driving significant improvements to ROI.

Virtualisation in cloud services has shifted dramatically over the last few years, and the trend looks set to continue.

Dot-Com era server growth.

As the online phenomenon exploded in the 2000s, the growth in servers followed, placing a heavy burden on data centres and IT budgets.

During this period, every application ran on its own dedicated x86 server or servers. However, most of these servers were barely utilised and had a lot of free time. It was not uncommon to see servers running at less than 15% capacity most of the time. Soon after, IT leaders started to notice that this was a very costly way of operating the IT environment. So researchers started to work on developing commercially feasible ways of virtualising x86 server infrastructure.

In 2001, VMware launched its first commercial x86 server virtualisation products: VMware GSX Server (hosted, running on top of a host OS) and VMware ESX Server (hostless, running directly on the hardware). VMware ESX allowed x86 servers to be virtualised, much as IBM’s VM had done for its mainframes.

Soon, enterprises could virtualise their servers, leading to large-scale consolidation of physical servers in the data centres into VMs. This drastically reduced server counts and resulted in significant cost savings.

This was the dawn of cloud computing. Cloud computing is built on server virtualisation, which has since grown into the multibillion-dollar industry we know today.

What is the problem with VMs then?

If you thought VMs solved the problem of expensive server sprawl and reduced TCO – you are correct. The proof is in their current success and popularity. However, VMs, despite all of their advantages, can be very resource hungry. Each VM runs a full copy of a guest OS on top of the hypervisor, along with a virtual copy of all the hardware that OS needs. Every VM therefore carries its own kernel and supporting virtualised resources, and this redundancy leads to bloat.

A VM runs a dedicated operating system on shared physical hardware resources such as CPU, memory and storage. With the advent of public cloud hyper-scalers and their user-friendly GUIs, it became very easy for users to spin up hundreds or thousands of VMs.

In addition, during the latter part of the 2000s, we saw the genesis of DevOps, a new software development and delivery culture. This, along with the Agile methodology, introduced rapid development, integration, testing and deployment of application workloads at velocities that the industry had never seen before. Each user along the software development life cycle (developer, tester, etc.) was able to spin up VMs at will. The hyper-scalers have made it super simple to consume compute resources via APIs, their supported portals and DevOps tooling. Since the hyper-scaler handles the management of the supporting hypervisor and hardware, users can be excused for becoming complacent, optimising less and consuming more.

For these reasons, we are now experiencing VM sprawl, similar to the server sprawl of a decade earlier. This has led to businesses experiencing “bill shocks” and increased IT budget concerns.

What is the solution to VM sprawl?

The solution to the problem of VM sprawl is Containerisation. Often the compute resources (CPU, RAM and storage) consumed by the application itself are dwarfed by those consumed by the operating system in a VM. Hence, one may ask: why use an entire VM with its own OS, kernel and I/O for small applications?

Containers are like VMs in that they give each application an isolated, discrete space in which to execute in memory and keep its storage. They present the appearance of an individual system, so each Container can have its own sysadmins and groups of users. They have sufficient resources to run an individual application with its own filesystem and separate processes.

Containers are built on a layered filesystem and share the host OS kernel, which minimises overall resource consumption – each Container holds only the files and resources required to run a single process or workload.
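To make the layered filesystem idea concrete, here is a minimal sketch in Python. It is a toy model only, not how Docker or any real storage driver is implemented. The class name `LayeredFS`, the `WHITEOUT` marker and the file paths are all hypothetical names chosen for illustration. The sketch assumes read-only image layers shared between Containers, plus one thin writable layer per Container.

```python
from typing import Dict, List, Optional

# Hypothetical marker meaning "this file was deleted in an upper layer".
WHITEOUT = object()


class LayeredFS:
    """Toy union filesystem: shared read-only layers plus one writable layer."""

    def __init__(self, image_layers: List[Dict[str, object]]):
        # Read-only layers, ordered from the base layer (first) to the top (last).
        self.image_layers = image_layers
        # Each Container gets its own private writable layer.
        self.writable: Dict[str, object] = {}

    def read(self, path: str) -> Optional[object]:
        # Search from the top-most layer downwards; the first hit wins.
        for layer in [self.writable, *reversed(self.image_layers)]:
            if path in layer:
                value = layer[path]
                return None if value is WHITEOUT else value
        return None

    def write(self, path: str, data: str) -> None:
        # Writes never touch the shared layers, only the private one.
        self.writable[path] = data

    def delete(self, path: str) -> None:
        # A delete is recorded as a marker that hides the lower layers' copy.
        self.writable[path] = WHITEOUT


# Two Containers started from the same two (illustrative) image layers.
base_layer = {"/etc/os-release": "base-os", "/bin/sh": "shell"}
app_layer = {"/app/main.py": "print('hi')"}

container_1 = LayeredFS([base_layer, app_layer])
container_2 = LayeredFS([base_layer, app_layer])

# Changes made in one Container are invisible to the other.
container_1.write("/etc/os-release", "patched")
container_1.delete("/bin/sh")
```

In this model the two Containers hold a single shared copy of the base and application layers, and each one stores only its own changes. That sharing is the intuition behind the small footprint described above.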

Containerisation separates the applications from individual hosts so that the applications can be moved around flexibly. Similar to a hypervisor that creates and runs a VM, a Containerisation platform creates and runs a Container. Today the most popular platform (as supported by the hyper-scalers) is Docker (hence the use of Docker statistics in the introduction).

Containerisation provides a repeatable and reliable process for cloud native application releases enabling continuous software delivery. This has fantastic benefits to the business.

Containers virtualise the operating system itself. By virtualising a single host operating system, developers can now run hundreds of Containers with a significantly smaller footprint than the same number of VMs would need.

Containers can significantly improve a business’ server infrastructure efficiency leading to cost savings.

Benefits of containers.

Simplified System Administration – Containers on a single host share just one OS kernel, so there is only one kernel to maintain. When sysadmins patch the host OS kernel, every Container on that host picks up the patched kernel by default. Libraries bundled inside a Container image are updated by rebuilding that image.

Activation velocity – Containers are much quicker to create and run than VMs, and they can be torn down just as rapidly. The average lifespan of a Container is 2.5 days, while the average lifespan of a VM is 23 days. Additional Containers can typically be spawned in seconds or less, compared with minutes for VMs. This is because there is no need to install and configure an OS for each Container and set up databases as we do with a VM. Nor does a Container need to boot an OS. The benefits are clear for DevOps teams that have multiple build, test and release cycles every day.

Cost saving – Containers are more efficient in terms of CPU, memory and storage. They also save on OS-related costs. Containers can take up as little as a few MBs because they share the host OS kernel and, where their images have layers in common, binaries and libraries. Overall, a physical server can host two to three times as many Containers as VMs.

Portability – Containers encapsulate everything needed to run an application so that it runs the same way wherever it is hosted: the same on a laptop as in the cloud. Containers also make it easier to move legacy applications with minimal risk. The portability extends beyond the host to the OS level, as Container platforms are available on multiple OSs. As the majority of enterprises are using multi-cloud or hybrid cloud models, Containers enable simpler porting of their applications between cloud platforms.

Loosely coupled, distributed, elastic, liberated micro-services – In the world of Containers, applications are broken into smaller, independent pieces and can be deployed and managed dynamically – not a fat monolithic stack running on one big single-purpose machine.

Reliability and Scalability – Containers can be clustered for high availability, horizontal scalability and self-healing in case of failure.
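The self-healing and horizontal scaling behaviour can be sketched as a simple "reconcile" loop that compares the desired number of Containers with the number actually running and closes the gap. The sketch below is an illustration under stated assumptions, not the implementation of any particular clustering platform. The `Cluster` class, its fields, the `web-N` naming and the least-loaded placement rule are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cluster:
    desired_replicas: int
    hosts: List[str]
    # Maps Container name -> host name for Containers currently running.
    running: Dict[str, str] = field(default_factory=dict)
    next_id: int = 0

    def fail_host(self, host: str) -> None:
        # Simulate a host failure: the host and all its Containers disappear.
        self.hosts.remove(host)
        self.running = {c: h for c, h in self.running.items() if h != host}

    def reconcile(self) -> List[str]:
        """Start or stop Containers until running matches desired_replicas."""
        started = []
        while len(self.running) < self.desired_replicas and self.hosts:
            # Assumed placement rule: pick the host with the fewest Containers.
            load = {h: 0 for h in self.hosts}
            for h in self.running.values():
                load[h] += 1
            target = min(self.hosts, key=lambda h: load[h])
            name = f"web-{self.next_id}"
            self.next_id += 1
            self.running[name] = target
            started.append(name)
        while len(self.running) > self.desired_replicas:
            # Scale down by stopping the most recently started Container.
            self.running.popitem()
        return started


cluster = Cluster(desired_replicas=4, hosts=["host-a", "host-b"])
initial = cluster.reconcile()      # first run starts all four Containers

cluster.fail_host("host-b")        # two Containers are lost with the host
replaced = cluster.reconcile()     # self-healing: replacements are started

cluster.desired_replicas = 6       # horizontal scaling: ask for more
scaled = cluster.reconcile()
```

The design choice worth noting is that the operator declares only the desired state (`desired_replicas`). Recovery from failure and scaling up or down are then the same operation: run `reconcile` again.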

In conclusion, Containers are to VMs what VMs were to physical servers – Containers will deliver much higher compute densities, velocity, scalability and flexibility. The adoption of this virtualisation technology has spread from DevOps to mainstream production applications, and it can drive an overall reduction in the cost of cloud computing and an improved TCO. As your organisation undertakes its journey through the DevOps culture and adopts an automated CI/CD toolset, you will be drawn into this world of virtualisation and begin to see the benefits that Containers can deliver.

If you need help with your journey to the new world of Containers, whether it is about selecting the right platform or adopting Containers for your DevOps or production systems, contact us at Macquarie Cloud Services as we have the people with the expertise and enthusiasm to make your journey enjoyable and successful.